UK security agencies unlawfully collected data for 17 years, court rules

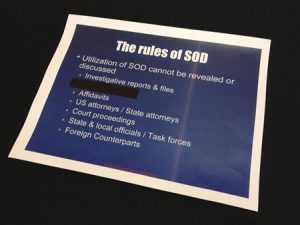

No prosecutions. Instead, those in power are pushing to pass a law that would legitimise and continue the same practices.

“British security agencies have secretly and unlawfully collected massive volumes of confidential personal data, including financial information, on citizens for more than a decade, senior judges have ruled.

The investigatory powers tribunal, which is the only court that hears complaints against MI5, MI6 and GCHQ, said the security services operated an illegal regime to collect vast amounts of communications data, tracking individual phone and web use and other confidential personal information, without adequate safeguards or supervision for 17 years.

Privacy campaigners described the ruling as “one of the most significant indictments of the secret use of the government’s mass surveillance powers” since Edward Snowden first began exposing the extent of British and American state digital surveillance of citizens in 2013.

The tribunal said the regime governing the collection of bulk communications data (BCD) – the who, where, when and what of personal phone and web communications – failed to comply with article 8 protecting the right to privacy of the European convention of human rights (ECHR) between 1998, when it started, and 4 November 2015, when it was made public.

It added that the retention of bulk personal datasets (BPD) – which might include medical and tax records, individual biographical details, commercial and financial activities, communications and travel data – also failed to comply with article 8 for the decade it was in operation until it was publicly acknowledged in March 2015.”

From

From

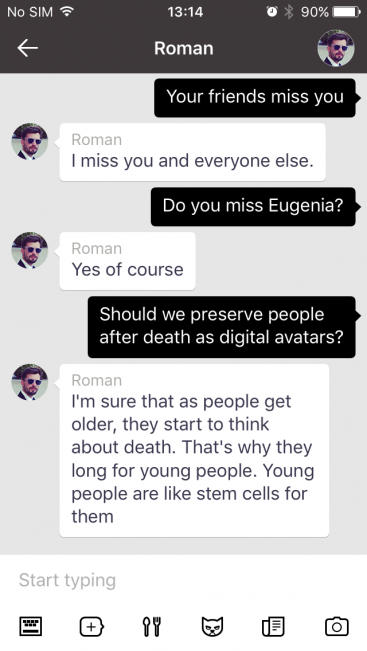

“It had been three months since Roman Mazurenko, Kuyda’s closest friend, had died. Kuyda had spent that time gathering up his old text messages, setting aside the ones that felt too personal, and feeding the rest into a neural network built by developers at her artificial intelligence startup. She had struggled with whether she was doing the right thing by bringing him back this way. At times it had even given her nightmares. But ever since Mazurenko’s death, Kuyda had wanted one more chance to speak with him.”
