“Are you happy now? The uncertain future of emotion analytics”

Elise Thomas writes at Hopes & Fears:

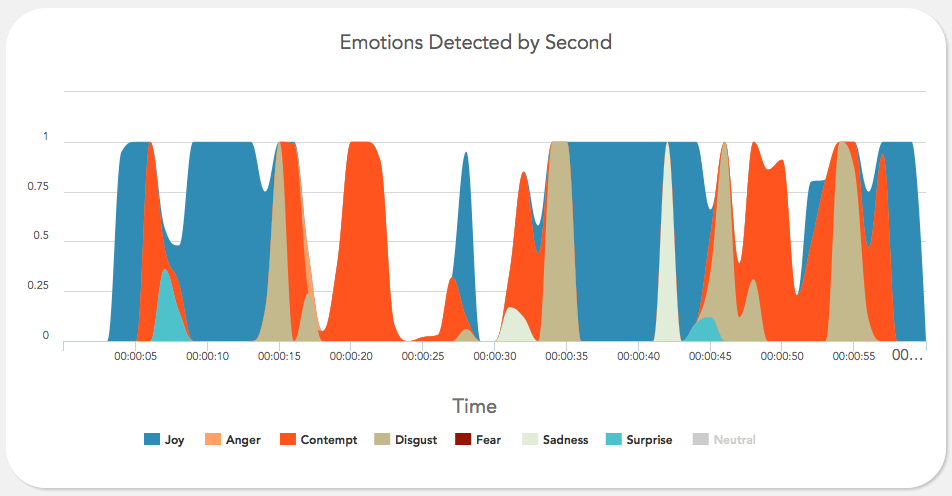

“Right now, in a handful of computing labs scattered across the world, new software is being developed which has the potential to completely change our relationship with technology. Affective computing is about creating technology which recognizes and responds to your emotions. Using webcams, microphones or biometric sensors, the software uses a person’s physical reactions to analyze their emotional state, generating data which can then be used to monitor, mimic or manipulate that person’s emotions.”

“Corporations spend billions each year trying to build “authentic” emotional connections to their target audiences. Marketing research is one of the most prolific research fields around, conducting thousands of studies on how to more effectively manipulate consumers’ decision-making. Advertisers are extremely interested in affective computing and particularly in a branch known as emotion analytics, which offers unprecedented real-time access to consumers’ emotional reactions and the ability to program alternative responses depending on how the content is being received.

For example, if two people watch an advertisement with a joke and only one person laughs, the software can be programmed to show more of the same kind of advertising to the person who laughs while trying different sorts of advertising on the person who did not laugh to see if it’s more effective. In essence, affective computing could enable advertisers to create individually-tailored advertising en masse.”

“Say 15 years from now a particular brand of weight loss supplements obtains a particular girl’s information and locks on. When she scrolls through her Facebook, she sees pictures of rail-thin celebrities, carefully calibrated to capture her attention. When she turns on the TV, it automatically starts on an episode of ‘The Biggest Loser,’ tracking her facial expressions to find the optimal moment for a supplement commercial. When she sets her music on shuffle, it ‘randomly’ plays through a selection of the songs which make her sad. This goes on for weeks.

Now let’s add another layer. This girl is 14, and struggling with depression. She’s being bullied in school. Having become the target of a deliberate and persistent campaign by her technology to undermine her body image and sense of self-worth, she’s at risk of making some drastic choices.”