How the “Math Men” Overthrew the “Mad Men”

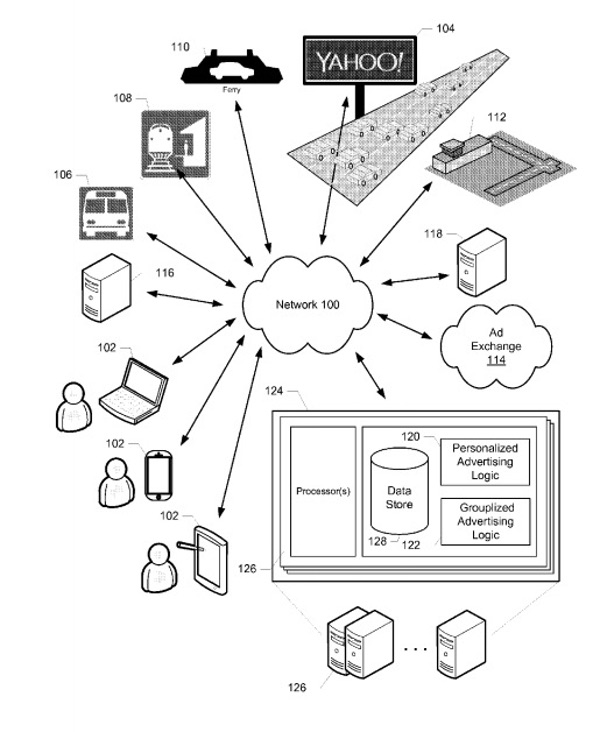

Once, Mad Men ruled advertising. They’ve now been eclipsed by Math Men — the engineers and data scientists whose province is machines, algorithms, pureed data, and artificial intelligence. Yet Math Men are beleaguered, as Mark Zuckerberg demonstrated when he humbled himself before Congress, in April. Math Men’s adoration of data — coupled with their truculence and an arrogant conviction that their ‘science’ is nearly flawless — has aroused government anger, much as Microsoft did two decades ago.

The power of Math Men is awesome. Google and Facebook each have a market value exceeding the combined value of the six largest advertising and marketing holding companies. Together, they claim six out of every ten dollars spent on digital advertising, and nine out of ten new digital ad dollars. They increasingly dominate a global advertising and marketing business estimated at up to two trillion dollars a year. Facebook alone generates more ad dollars than all of America’s newspapers, and Google has twice the ad revenues of Facebook.

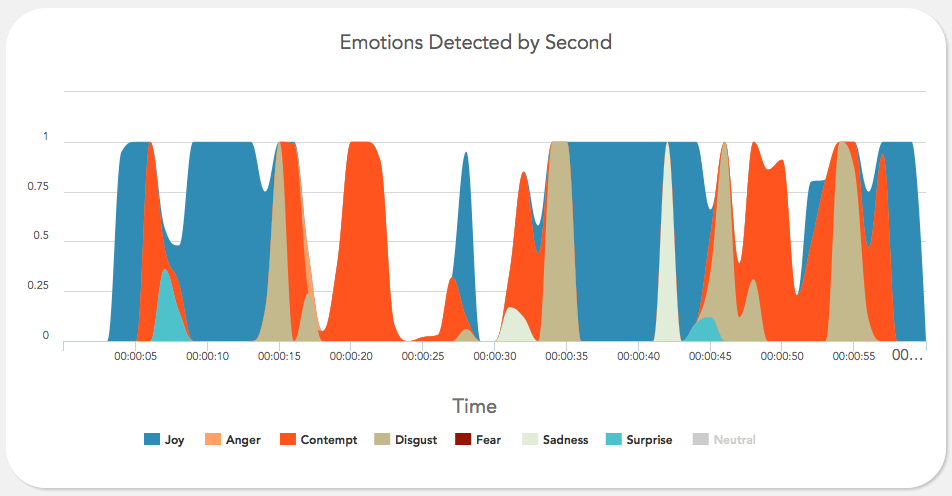

“Right now, in a handful of computing labs scattered across the world, new software is being developed which has the potential to completely change our relationship with technology. Affective computing is about creating technology which recognizes and responds to your emotions. Using webcams, microphones or biometric sensors, the software uses a person’s physical reactions to analyze their emotional state, generating data which can then be used to monitor, mimic or manipulate that person’s emotions.”

For two weeks this past spring, some shoppers at the Westfield Stratford shopping mall in the United Kingdom were followed by a homeless dog appearing on electronic billboards. The roving canine, named Barley, was part of an RFID-based advertisement campaign conducted by Ogilvy on behalf of the Battersea Dogs and Cats Home, a rehabilitation and adoption organization for stray animals. The enabling technology was provided by Intellifi, and was installed by U.K.-based RFID consultancy RFIDiom.
