Tooth-mounted trackers could be the next fitness wearables

Tooth-mounted trackers that keep a tally of how much food and how many calories people consume could be the next frontier in fitness monitoring. A research team at the Tufts University Biomedical Engineering Department is already experimenting with the tooth sensors.

“We have extended common RFID [radiofrequency ID] technology to a sensor package that can dynamically read and transmit information on its environment, whether it is affixed to a tooth, to skin, or any other surface,” Fiorenzo Omenetto, Ph.D., corresponding author and the Frank C. Doble Professor of Engineering at Tufts, said in a statement.

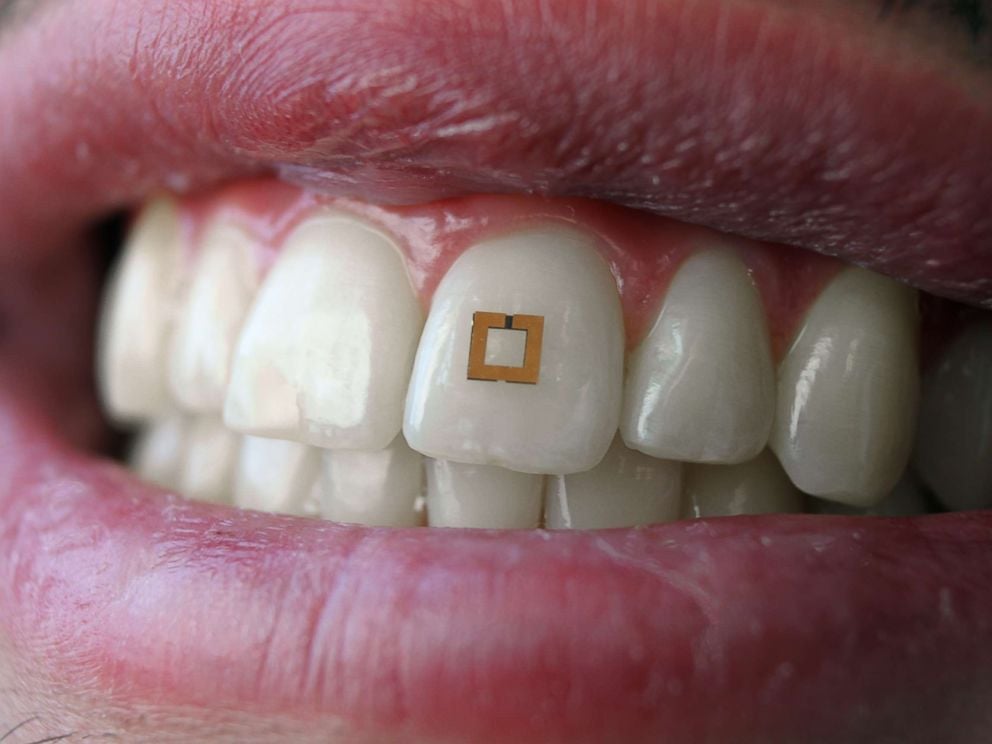

The sensors look like custom microchips stuck to the tooth. They are flexible, tiny squares, ranging from 4 mm by 4 mm down to about 2 mm by 2 mm, that are applied directly to human teeth. Each one has three layers: outer layers made of titanium and gold, and a middle layer of either silk fibers or water-based gels.

In small-scale studies, four human volunteers wore sensors, which had silk as the middle “detector” layer, on their teeth and swished liquids around in their mouths to see if the sensors would function. The researchers were testing for sugar and for alcohol.

The tiny squares successfully sent wireless signals to tablets and cell phones.

In one of their first experiments, the chip could tell the difference between solutions of purified water, artificial saliva, 50 percent alcohol and wood alcohol. It would then wirelessly signal to a nearby receiver via radiofrequency, similar to how E-ZPass transponders work…