Leaked files reveal scope of Cellebrite’s phone cracking technology

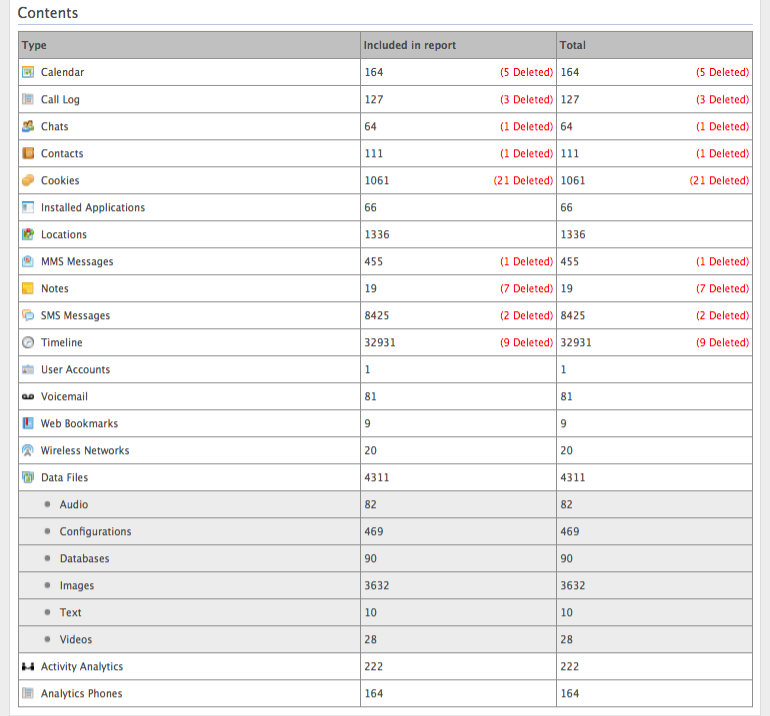

“Earlier this year, [ZDNet was] sent a series of large, encrypted files purportedly belonging to a U.S. police department as a result of a leak at a law firm, which was insecurely synchronizing its backup systems across the internet without a password. Among the files was a series of phone dumps created by the police department with specialist equipment, which was created by Cellebrite, an Israeli firm that provides phone-cracking technology. We obtained a number of these so-called extraction reports. One of the more interesting reports by far was from an iPhone 5 running iOS 8. The phone’s owner didn’t use a passcode, meaning the phone was entirely unencrypted. The phone was plugged into a Cellebrite UFED device, which in this case was a dedicated computer in the police department. The police officer carried out a logical extraction, which downloads what’s in the phone’s memory at the time. (Motherboard has more on how Cellebrite’s extraction process works.) In some cases, it also contained data the user had recently deleted. To our knowledge, there are a few sample reports out there floating on the web, but it’s rare to see a real-world example of how much data can be siphoned off from a fairly modern device. We’re publishing some snippets from the report, with sensitive or identifiable information redacted.”

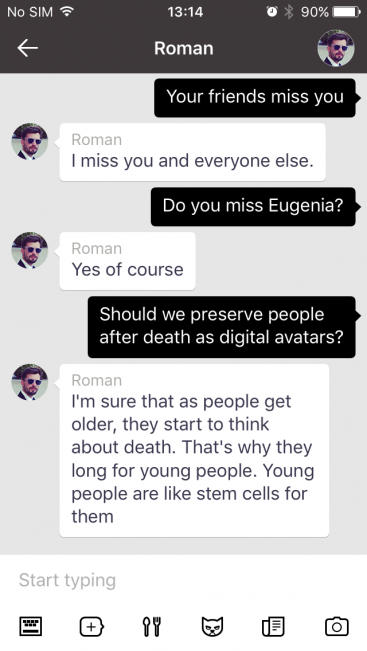

“It had been three months since Roman Mazurenko, Kuyda’s closest friend, had died. Kuyda had spent that time gathering up his old text messages, setting aside the ones that felt too personal, and feeding the rest into a neural network built by developers at her artificial intelligence startup. She had struggled with whether she was doing the right thing by bringing him back this way. At times it had even given her nightmares. But ever since Mazurenko’s death, Kuyda had wanted one more chance to speak with him.”
