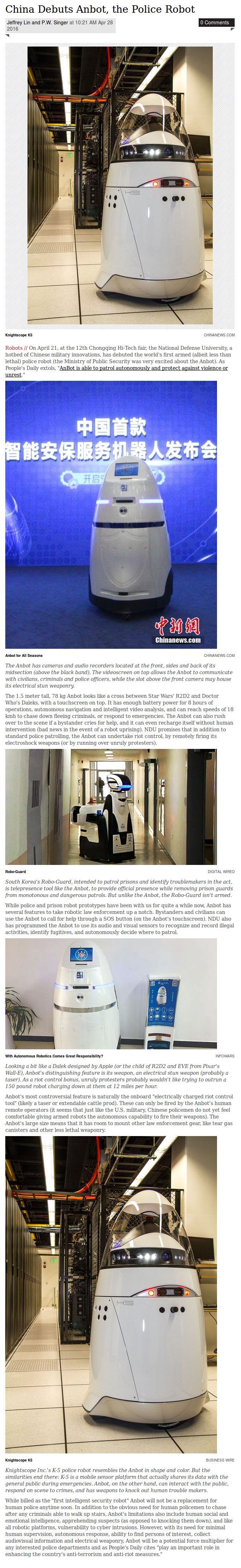

“RoboCop” deployed to Silicon Valley shopping centre

At the Stanford shopping center in Palo Alto, California, there is a new sheriff in town – and it’s an egg-shaped robot.

“Everyone likes to take robot selfies,” Stephens said. “People really like to interact with the robot.” He said there have even been two instances in which the company found lipstick marks where people had kissed the robot’s graffiti-resistant dome.

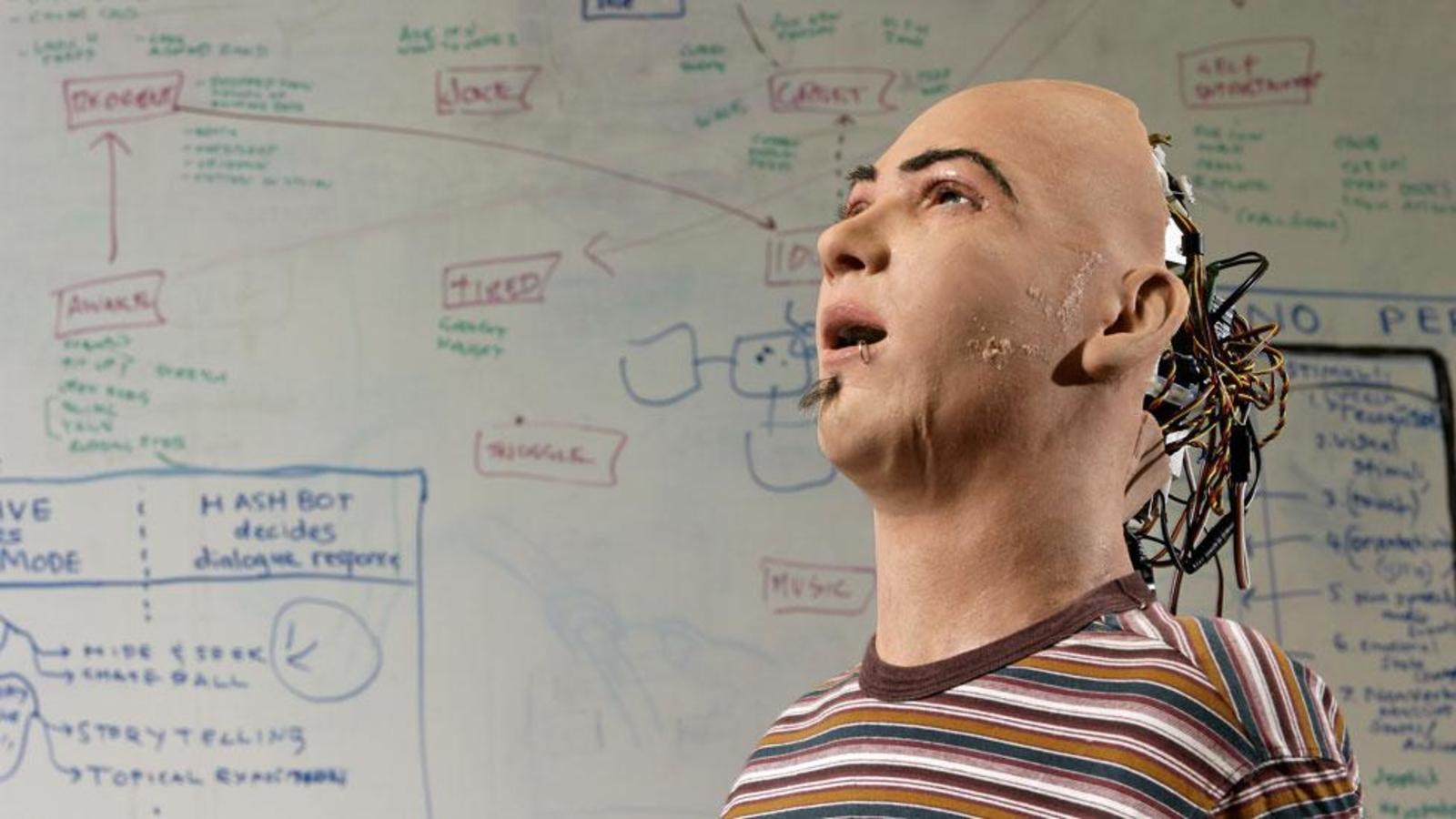

The slightly comical Dalek design was intentional…