Algorithms viewed as ‘unfair’ by consumers

The US-based Pew Research Center has found the American public is growing increasingly distrustful of the use of computer algorithms in a variety of sectors, including finance, media and the justice system.

A report released over the weekend found that a broad section of those surveyed feel computer programs will always reflect some level of human bias, and that they might violate privacy, fail to capture the nuance of human complexity or simply be unfair.

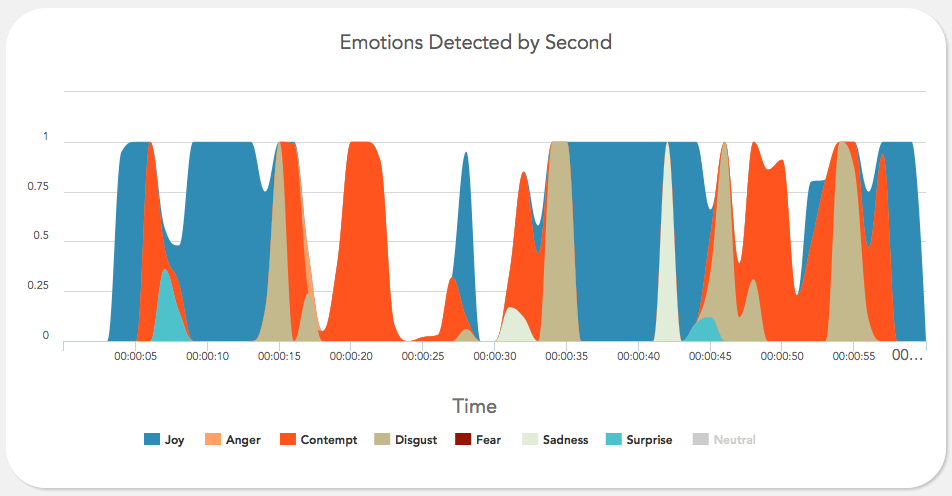

“Right now, in a handful of computing labs scattered across the world, new software is being developed which has the potential to completely change our relationship with technology. Affective computing is about creating technology which recognizes and responds to your emotions. Using webcams, microphones or biometric sensors, the software uses a person’s physical reactions to analyze their emotional state, generating data which can then be used to monitor, mimic or manipulate that person’s emotions.”