Facebook Threatens To Cut Off Australians From Sharing News

The threat escalates an antitrust battle between Facebook and the Australian government, which wants the social-media giant and Alphabet’s Google to compensate publishers for the value that news content provides to the companies’ platforms. The legislation still needs to be approved by Australia’s parliament. Under the proposal, an arbitration panel would decide how much the technology companies must pay publishers if the two sides can’t agree. Facebook said in a blog post Monday that the proposal is unfair and would allow publishers to charge any price they want. If the legislation becomes law, the company said, it will take the unprecedented step of preventing Australians from sharing news on Facebook and Instagram.

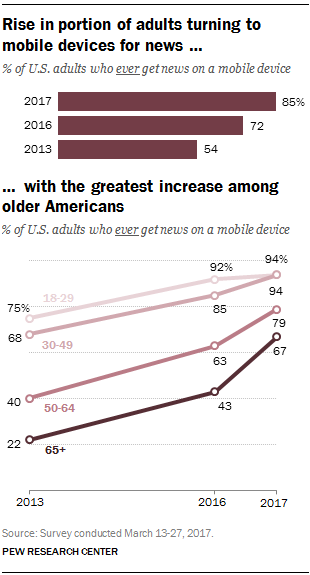

