Taser Company Axon Is Selling AI That Turns Body Cam Audio Into Police Reports

Axon on Tuesday announced a new tool called Draft One that uses artificial intelligence built on OpenAI’s GPT-4 Turbo model to transcribe audio from body cameras and automatically turn it into a police report. Axon CEO Rick Smith told Forbes that police officers will then be able to review the document to ensure accuracy. From the report:

Axon claims one early tester of the tool, Fort Collins Colorado Police Department, has seen an 82% decrease in time spent writing reports. “If an officer spends half their day reporting, and we can cut that in half, we have an opportunity to potentially free up 25% of an officer’s time to be back out policing,” Smith said. These reports, though, are often used as evidence in criminal trials, and critics are concerned that relying on AI could put people at risk by depending on language models that are known to “hallucinate,” or make things up, as well as display racial bias, either blatantly or unconsciously. “It’s kind of a nightmare,” said Dave Maass, surveillance technologies investigations director at the Electronic Frontier Foundation. “Police, who aren’t specialists in AI, and aren’t going to be specialists in recognizing the problems with AI, are going to use these systems to generate language that could affect millions of people in their involvement with the criminal justice system. What could go wrong?” Smith acknowledged there are dangers. “When people talk about bias in AI, it really is: Is this going to exacerbate racism by taking training data that’s going to treat people differently?” he told Forbes. “That was the main risk.”

Smith said Axon is recommending police don’t use the AI to write reports for incidents as serious as a police shooting, where vital information could be missed. “An officer-involved shooting is likely a scenario where it would not be used, and I’d probably advise people against it, just because there’s so much complexity, the stakes are so high.” He said some early customers are only using Draft One for misdemeanors, though others are writing up “more significant incidents,” including use-of-force cases. Axon, however, won’t have control over how individual police departments use the tools.

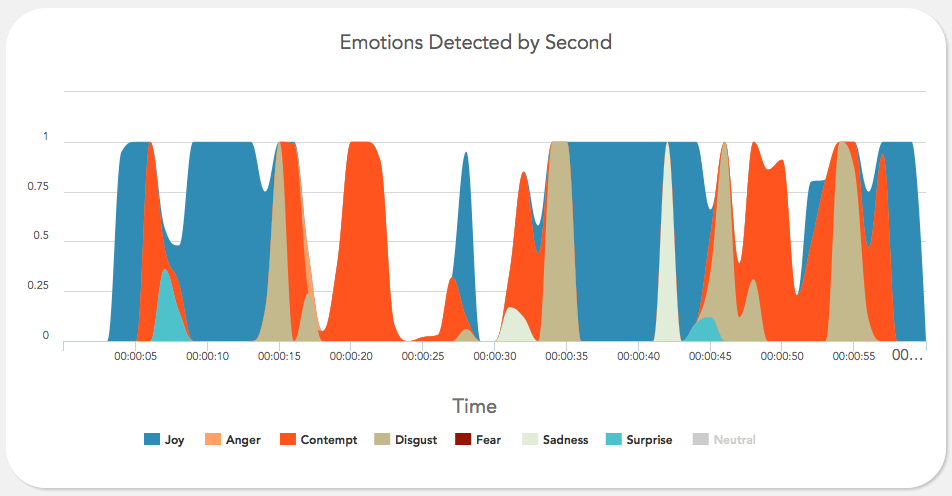

“Right now, in a handful of computing labs scattered across the world, new software is being developed which has the potential to completely change our relationship with technology. Affective computing is about creating technology which recognizes and responds to your emotions. Using webcams, microphones or biometric sensors, the software uses a person’s physical reactions to analyze their emotional state, generating data which can then be used to monitor, mimic or manipulate that person’s emotions.”

“An ambitious project to blanket New York and London with ultrafast Wi-Fi via so-called ‘smart kiosks,’ which will replace obsolete public telephones, is the work of a Google-backed startup.”
